Imagine you’re at trivia night. Someone asks about the latest Nobel Prize winners. You probably wouldn’t just guess. Instead, you’d pull out your phone, search for the answer, and then share it with confidence.

That’s exactly what a RAG pipeline does for AI. And it’s changing everything. The numbers tell the story: the RAG market was valued at $1.2 billion in 2024 and is projected to reach $11.0 billion by 2030, a forecast growth rate of 49.1% per year. That’s not hype; it’s transformation.

The Problem: When Smart Isn’t Enough

Large Language Models are impressive. They can write poetry, debug code, and even explain quantum physics. However, they have a major limitation: they work from memory, specifically from what they learned during training.

Ask about yesterday’s news? They can’t help. Need information from your internal files? No luck there either. It’s like having a brilliant coworker who never reads emails or news. Smart? Yes. Up-to-date? Not at all.

This is where RAG pipelines make a difference. They give AI the ability to “look things up.” Adoption is climbing fast: 51% of enterprises now use RAG architecture, up from 31% a year earlier.

What RAG Really Means

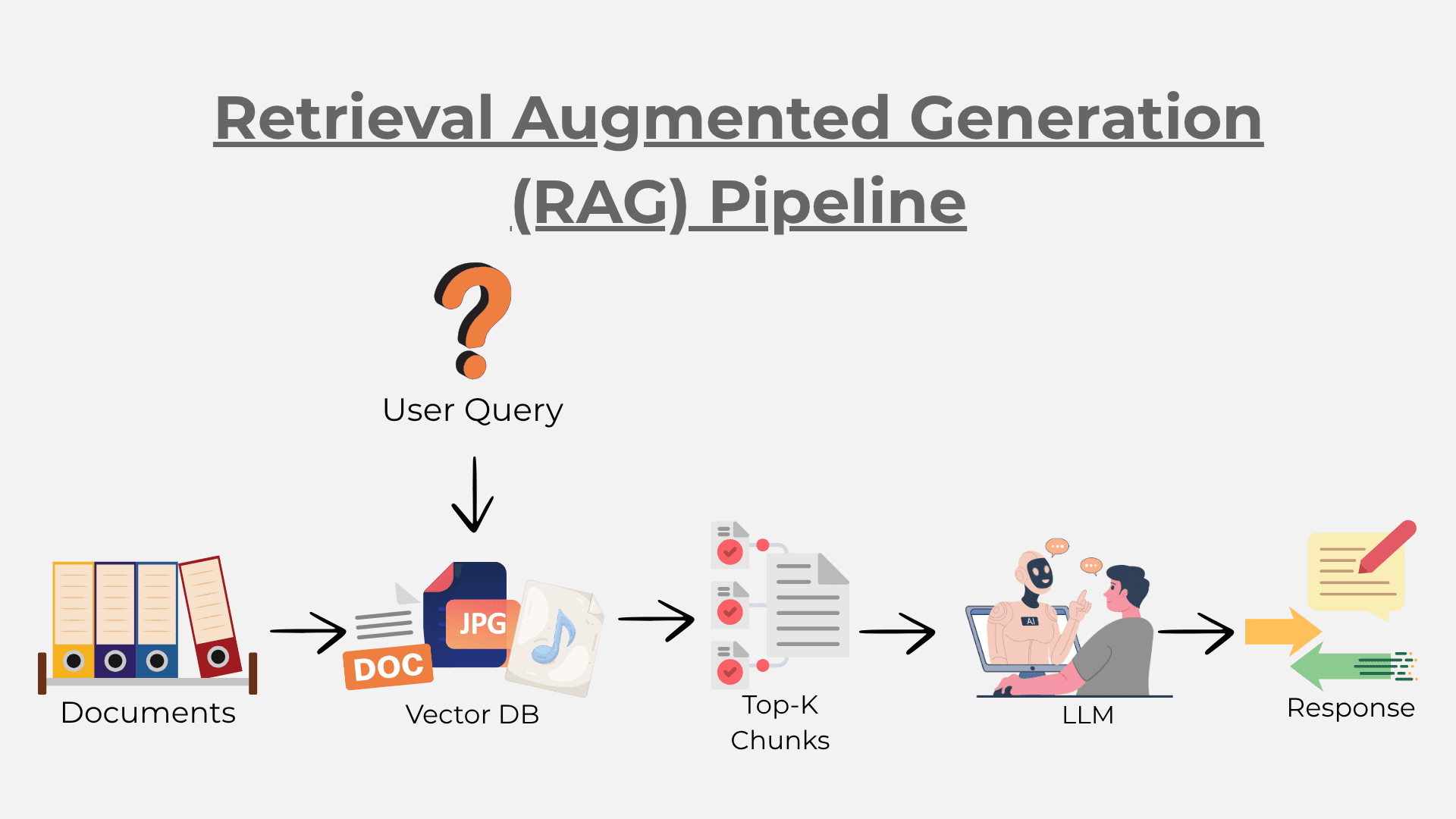

RAG stands for Retrieval-Augmented Generation. It combines two powerful capabilities: knowledge retrieval and intelligent response generation. Think of it as giving your AI both a brain and a library card.

So what is RAG in artificial intelligence? It mirrors how humans research. When you face a question you’re not sure about, you don’t guess. You research first. You check Google. You flip through books. You ask experts. Then you answer based on fresh, verified knowledge.

A RAG pipeline works the same way, but at machine speed. And because the cost of generating an AI response has dropped dramatically (by some estimates, more than 1,000x in two years), real-time RAG systems are now affordable for everyday business use.

How Does RAG Work?

The RAG pipeline follows a simple two-step process:

Step One: Retrieval

You ask a question. The system searches through documents and finds the most relevant information. This may include company policies, news articles, product manuals, or research files.
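To make the retrieval step concrete, here is a minimal sketch that ranks documents by how many of the question's words they contain. This is a simplification of our own: production pipelines usually rank with embeddings or a search engine, and `retrieve`, `tokenize`, and the sample documents are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question: str, documents: dict[str, str], top_k: int = 2) -> list[str]:
    """Return the IDs of up to top_k documents sharing the most words with the question."""
    question_terms = set(tokenize(question))
    scores = {}
    for doc_id, text in documents.items():
        doc_counts = Counter(tokenize(text))
        # Score: total occurrences of the question's words in this document.
        scores[doc_id] = sum(doc_counts[term] for term in question_terms)
    ranked = sorted(scores, key=scores.get, reverse=True)
    # Drop documents with no overlap at all.
    return [doc_id for doc_id in ranked[:top_k] if scores[doc_id] > 0]


# Hypothetical knowledge base: document ID -> text.
docs = {
    "refund-policy": "Customers can request a refund within 30 days of purchase.",
    "shipping": "Orders ship within two business days.",
    "warranty": "The warranty covers manufacturing defects for one year.",
}
print(retrieve("How many days do I have to request a refund?", docs))
# → ['refund-policy', 'shipping']
```

Note that even this crude scorer puts the refund policy first; the shipping page only sneaks in because it also mentions "days", which is exactly the kind of noise that embedding-based retrieval and re-ranking aim to reduce.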

Step Two: Generation

Next, the language model receives what the retrieval step found, together with the original question. Instead of copying and pasting, it uses that context to craft a meaningful answer.
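Generation typically starts by placing the retrieved text into the model's prompt. The sketch below assumes passages arrive as hypothetical `(source_id, text)` pairs and uses instruction wording chosen for illustration; the call to an actual LLM is omitted because it depends on the provider's API.

```python
def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Pack retrieved (source_id, text) passages and the question into a single prompt."""
    # Label each passage with its source so the model can cite it.
    context = "\n".join(f"[{source_id}] {text}" for source_id, text in passages)
    return (
        "Answer the question using only the context below. "
        "Cite the source IDs you rely on, and say you don't know "
        "if the context does not contain the answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_prompt(
    "How many days do I have to request a refund?",
    [("refund-policy", "Customers can request a refund within 30 days of purchase.")],
)
print(prompt)
```

Asking the model to cite source IDs makes answers easier to verify, and the explicit "say you don't know" instruction gives it an alternative to guessing when retrieval comes up empty.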

In short: search first, answer second, with every answer grounded in the retrieved sources.

What RAG Pipelines Can Do for Your Business

A RAG pipeline is more than clever technology. It solves real, everyday problems. Major companies are already seeing measurable impact.

- No More Outdated Information. Traditional AI models have a “knowledge cutoff.” With RAG, your AI can stay current: as long as new content is added to the knowledge base, it can draw on yesterday’s reports, this morning’s updates, and even data from minutes ago.

- Fewer AI Hallucinations. AI hallucination happens when models make up answers. RAG reduces this by retrieving real documents and grounding responses in them. Some reports claim that advanced RAG with knowledge graphs can push precision as high as 99%.

- Instant Expertise. Need an AI expert on your business? Just point a RAG pipeline at your documents; no costly retraining is required. Large organizations are leading the way, accounting for more than 73% of RAG implementations.

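- Better Privacy. With RAG, sensitive data can stay in your own environment instead of being baked into a model’s training data. Retrieval can also be restricted so the AI only accesses a document when it is needed and only for users authorized to see it.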
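One common way to make sure the AI only sees what a user is allowed to see is to filter documents by permission before anything is ranked or placed in a prompt. The sketch assumes each document carries a hypothetical `allowed_groups` set; real systems usually take these permissions from an existing identity or document-management system.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    text: str
    # Hypothetical access-control metadata: groups allowed to read this document.
    allowed_groups: set[str] = field(default_factory=set)


def authorized(documents: list[Document], user_groups: set[str]) -> list[Document]:
    """Keep only documents that share at least one group with the user.

    Apply this before ranking, so restricted text can never reach the prompt.
    """
    return [doc for doc in documents if doc.allowed_groups & user_groups]


corpus = [
    Document("handbook", "Vacation policy and office hours.", {"all-staff"}),
    Document("salaries", "Compensation bands by role.", {"hr"}),
    Document("runbook", "Steps for restarting the billing service.", {"engineering"}),
]
visible = authorized(corpus, user_groups={"all-staff", "engineering"})
print([doc.doc_id for doc in visible])  # → ['handbook', 'runbook']
```

Filtering first, rather than hiding restricted results after ranking, means a restricted document's text is never sent to the model at all for that user.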

Building a RAG Pipeline

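- Stage One: Set Up the Knowledge Base. Start with your documents: PDFs, web pages, databases. The system ingests them, breaks them into chunks, and converts each chunk into an embedding, a list of numbers that captures its meaning. The embeddings are stored in a searchable index, often a vector database.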

- Stage Two: Process the Question. When someone asks a question, the system converts it into an embedding using the same model, so the question and the chunks can be compared by meaning rather than by exact wording.

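- Stage Three: Find Relevant Information. The system compares the question’s embedding with the stored chunk embeddings (commonly using cosine similarity), retrieves the top-matching chunks, and ranks them by relevance.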

- Stage Four: Generate the Answer. The retrieved chunks are passed to the LLM as context along with the question, allowing it to generate an accurate, helpful response grounded in your data.
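The first three stages can be sketched end to end in plain Python. This is a toy, not a production design: the word-count `embed` function stands in for a real embedding model, a Python list stands in for a vector database, and the chunk sizes, function names, and sample manual text are all assumptions for illustration.

```python
import math
import re


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 12, overlap: int = 2) -> list[str]:
    """Stage one: split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str, vocab: list[str]) -> list[float]:
    """Toy stand-in for an embedding model: a unit-length word-count vector over `vocab`."""
    position = {word: i for i, word in enumerate(vocab)}
    vector = [0.0] * len(vocab)
    for word in tokenize(text):
        if word in position:  # words outside the vocabulary are ignored
            vector[position[word]] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]


def search(question: str, index: list[tuple[str, list[float]]],
           vocab: list[str], k: int = 2) -> list[str]:
    """Stages two and three: embed the question the same way, then rank chunks."""
    q = embed(question, vocab)
    # Both vectors have unit length, so the dot product equals the cosine similarity.
    scored = [(sum(a * b for a, b in zip(q, vec)), text) for text, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


manual = (
    "Hold the reset button for ten seconds to restore factory settings. "
    "The status light blinks amber while a firmware update installs. "
    "Contact support if the light stays red after restarting."
)
chunks = chunk(manual)                                  # stage one: chunk
vocab = sorted({w for c in chunks for w in tokenize(c)})
index = [(c, embed(c, vocab)) for c in chunks]          # stage one: precompute vectors
print(search("Why is the light blinking amber?", index, vocab, k=1))
```

The top chunk returned here is the one describing the amber status light, which would then be handed to the LLM in stage four. Overlapping windows help keep a sentence that straddles a chunk boundary intact in at least one chunk.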

Real-World RAG Pipeline Applications

RAG pipelines are transforming key industries:

- Customer Support

Thomson Reuters uses RAG to surface accurate answers for support staff. LinkedIn reduced issue resolution time by 28.6% using knowledge-graph-powered RAG.

- Medical Research & Healthcare

Researchers can quickly scan thousands of medical papers. Doctors retrieve relevant cases to speed up diagnosis and support clinical decisions.

- Legal Work

Law firms use RAG to find case law, regulatory documents, and precedents in seconds.

- Company Knowledge

Organizations are making decades of documents searchable. Employees ask questions in plain English and receive answers instantly. Many companies now identify 10 or more AI use cases, a large share of them powered by RAG.

- Education

Harvard Business School built ChatLTV, a RAG chatbot that helps students access course materials instantly.

Advanced RAG Techniques

Here are some evolving techniques:

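- Hybrid Search. Combining keyword and semantic search, so both exact terms and related concepts are matched.

- Re-Ranking. Rescoring the retrieved results with a more precise model before they reach the LLM.

- Query Enhancement. Rewriting or expanding the user’s question before searching.

- Multi-modal RAG. Retrieving images, audio, and video as well as text.

- Agentic RAG. AI agents that plan multi-step reasoning, retrieval, and actions.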
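Hybrid search needs a way to merge the keyword ranking and the semantic ranking into one list. One widely used option (our choice here; the techniques above don't prescribe it) is reciprocal rank fusion, where each document scores 1/(k + rank) in every list it appears in. The document IDs below are hypothetical, and k = 60 is a conventional default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into a single ranking.

    Each document earns 1 / (k + rank) from every list it appears in (rank starts at 1),
    so documents ranked well by both searches rise to the top.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


keyword_results = ["doc-a", "doc-b", "doc-c"]   # e.g. from a keyword index
semantic_results = ["doc-c", "doc-a", "doc-d"]  # e.g. from embedding search
fused = reciprocal_rank_fusion([keyword_results, semantic_results])
print(fused)  # → ['doc-a', 'doc-c', 'doc-b', 'doc-d']
```

Because fusion uses only rank positions, it sidesteps the problem that keyword scores and cosine similarities live on different scales. A re-ranking model could then rescore the top few fused results.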

The Future of RAG Pipelines

We’re still early in this journey, yet adoption is accelerating. LangChain, a widely used framework for building RAG applications, counts Klarna, Rakuten, and Replit among its production users.

AI assistants will blend trained knowledge with real-time retrieval, delivering far more reliable and helpful experiences.

Instead of asking “What did this AI learn?” the future asks: “What can this AI access?”

That’s more powerful. And that’s the future.

The Bottom Line

RAG pipelines give AI the ability to research, verify, and stay current. Enterprise spending on generative AI has surged, and a large portion flows directly into RAG-based systems.

It’s more than a memory boost. It’s a complete shift in how AI works.

The next time you chat with an AI that knows about recent events, there’s a good chance a RAG pipeline is behind it.

Ready to Explore RAG for Your Organization?

Start by identifying your most valuable knowledge sources—internal documents, databases, customer logs, and product manuals. Then explore how RAG can make that knowledge instantly accessible.

Cenango’s AI engineering team builds secure, custom RAG solutions that integrate with your existing systems. From connecting data sources to building intuitive AI interfaces, we help you harness retrieval-augmented intelligence—safely and efficiently.

The future is retrieval-augmented. Let Cenango help you get there.

Smarter answers, better enterprise intelligence